* update * [Fix] Fix HRFormer log link * [Feature] Add Application 'Just dance' (#2528) * [Docs] Add advanced tutorial of implement new model. (#2539) * [Doc] Update img (#2541) * [Feature] Support MotionBERT (#2482) * [Fix] Fix demo scripts (#2542) * [Fix] Fix Pose3dInferencer keypoint shape bug (#2543) * [Enhance] Add notifications when saving visualization results (#2545) * [Fix] MotionBERT training and flip-test (#2548) * [Docs] Enhance docs (#2555) * [Docs] Fix links in doc (#2557) * [Docs] add details (#2558) * [Refactor] 3d human pose demo (#2554) * [Docs] Update MotionBERT docs (#2559) * [Refactor] Update the arguments of 3d inferencer to align with the demo script (#2561) * [Enhance] Combined dataset supports custom sampling ratio (#2562) * [Docs] Add MultiSourceSampler docs (#2563) * [Doc] Refine docs (#2564) * [Feature][MMSIG] Add UniFormer Pose Estimation to Projects folder (#2501) * [Fix] Check the compatibility of inferencer's input/output (#2567) * [Fix]Fix 3d visualization (#2565) * [Feature] Add bear example in just dance (#2568) * [Doc] Add example and openxlab link for just dance (#2571) * [Fix] Configs' paths of VideoPose3d (#2572) * [Docs] update docs (#2573) * [Fix] Fix new config bug in train.py (#2575) * [Fix] Configs' of MotionBERT (#2574) * [Enhance] Normalization option in 3d human pose demo and inferencer (#2576) * [Fix] Fix the incorrect labels for training vis_head with combined datasets (#2550) * [Enhance] Enhance 3dpose demo and docs (#2578) * [Docs] Enhance Codecs documents (#2580) * [Feature] Add DWPose distilled WholeBody RTMPose models (#2581) * [Docs] Add deployment docs (#2582) * [Fix] Refine 3dpose (#2583) * [Fix] Fix config typo in rtmpose-x (#2585) * [Fix] Fix 3d inferencer (#2593) * [Feature] Add a simple visualize api (#2596) * [Feature][MMSIG] Support badcase analyze in test (#2584) * [Fix] fix bug in flip_bbox with xyxy format (#2598) * [Feature] Support ubody dataset (2d keypoints) (#2588) * [Fix] Fix visualization bug in 3d pose (#2594) * [Fix] Remove use-multi-frames option (#2601) * [Enhance] Update demos (#2602) * [Enhance] wholebody support openpose style visualization (#2609) * [Docs] Documentation regarding 3d pose (#2599) * [CodeCamp2023-533] Migration Deepfashion topdown heatmap algorithms to 1.x (#2597) * [Fix] fix badcase hook (#2616) * [Fix] Update dataset mim downloading source to OpenXLab (#2614) * [Docs] Update docs structure (#2617) * [Docs] Refine Docs (#2619) * [Fix] Fix numpy error (#2626) * [Docs] Update error info and docs (#2624) * [Fix] Fix inferencer argument name (#2627) * [Fix] fix links for coco+aic hrnet (#2630) * [Fix] fix a bug when visualize keypoint indices (#2631) * [Docs] Update rtmpose docs (#2642) * [Docs] update README.md (#2647) * [Docs] Add onnx of RTMPose models (#2656) * [Docs] Fix mmengine link (#2655) * [Docs] Update QR code (#2653) * [Feature] Add DWPose (#2643) * [Refactor] Reorganize distillers (#2658) * [CodeCamp2023-259]Document Writing: Advanced Tutorial - Custom Data Augmentation (#2605) * [Docs] Fix installation docs(#2668) * [Fix] Fix expired links in README (#2673) * [Feature] Support multi-dataset evaluation (#2674) * [Refactor] Specify labels to pack in codecs (#2659) * [Refactor] update mapping tables (#2676) * [Fix] fix link (#2677) * [Enhance] Enable CocoMetric to get ann_file from MessageHub (#2678) * [Fix] fix vitpose pretrained ckpts (#2687) * [Refactor] Refactor YOLOX-Pose into mmpose core package (#2620) * [Fix] Fix typo in COCOMetric(#2691) * [Fix] Fix bug raised by changing bbox_center to input_center (#2693) * [Feature] Surpport EDPose for inference(#2688) * [Refactor] Internet for 3d hand pose estimation (#2632) * [Fix] Change test batch_size of edpose to 1 (#2701) * [Docs] Add OpenXLab Badge (#2698) * [Doc] fix inferencer doc (#2702) * [Docs] Refine dataset config tutorial (#2707) * [Fix] modify yoloxpose test settings (#2706) * [Fix] add compatibility for argument `return_datasample` (#2708) * [Feature] Support ubody3d dataset (#2699) * [Fix] Fix 3d inferencer (#2709) * [Fix] Move ubody3d dataset to wholebody3d (#2712) * [Refactor] Refactor config and dataset file structures (#2711) * [Fix] give more clues when loading img failed (#2714) * [Feature] Add demo script for 3d hand pose (#2710) * [Fix] Fix Internet demo (#2717) * [codecamp: mmpose-315] 300W-LP data set support (#2716) * [Fix] Fix the typo in YOLOX-Pose (#2719) * [Feature] Add detectors trained on humanart (#2724) * [Feature] Add RTMPose-Wholebody (#2721) * [Doc] Fix github action badge in README (#2727) * [Fix] Fix bug of dwpose (#2728) * [Feature] Support hand3d inferencer (#2729) * [Fix] Fix new config of RTMW (#2731) * [Fix] Align visualization color of 3d demo (#2734) * [Fix] Refine h36m data loading and add head_size to PackPoseInputs (#2735) * [Refactor] Align test accuracy for AE (#2737) * [Refactor] Separate evaluation mappings from KeypointConverter (#2738) * [Fix] MotionbertLabel codec (#2739) * [Fix] Fix mask shape (#2740) * [Feature] Add training datasets of RTMW (#2743) * [Doc] update RTMPose README (#2744) * [Fix] skip warnings in demo (#2746) * Bump 1.2 (#2748) * add comments in dekr configs (#2751) --------- Co-authored-by: Peng Lu <penglu2097@gmail.com> Co-authored-by: Yifan Lareina WU <mhsj16lareina@gmail.com> Co-authored-by: Xin Li <7219519+xin-li-67@users.noreply.github.com> Co-authored-by: Indigo6 <40358785+Indigo6@users.noreply.github.com> Co-authored-by: 谢昕辰 <xiexinch@outlook.com> Co-authored-by: tpoisonooo <khj.application@aliyun.com> Co-authored-by: zhengjie.xu <jerryxuzhengjie@gmail.com> Co-authored-by: Mesopotamia <54797851+yzd-v@users.noreply.github.com> Co-authored-by: chaodyna <li0331_1@163.com> Co-authored-by: lwttttt <85999869+lwttttt@users.noreply.github.com> Co-authored-by: Kanji Yomoda <Kanji.yy@gmail.com> Co-authored-by: LiuYi-Up <73060646+LiuYi-Up@users.noreply.github.com> Co-authored-by: ZhaoQiiii <102809799+ZhaoQiiii@users.noreply.github.com> Co-authored-by: Yang-ChangHui <71805205+Yang-Changhui@users.noreply.github.com> Co-authored-by: Xuan Ju <89566272+juxuan27@users.noreply.github.com> |

||

|---|---|---|

| .. | ||

| configs | ||

| models | ||

| README.md | ||

README.md

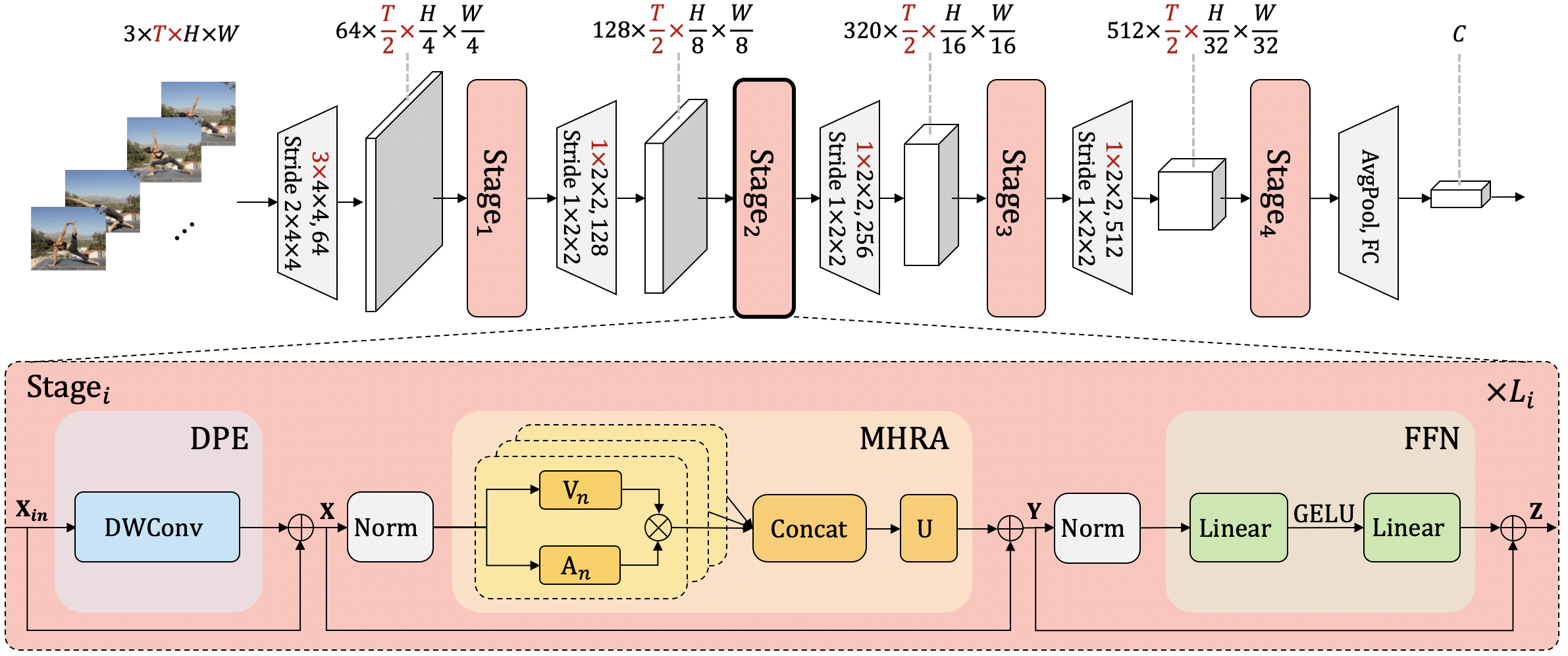

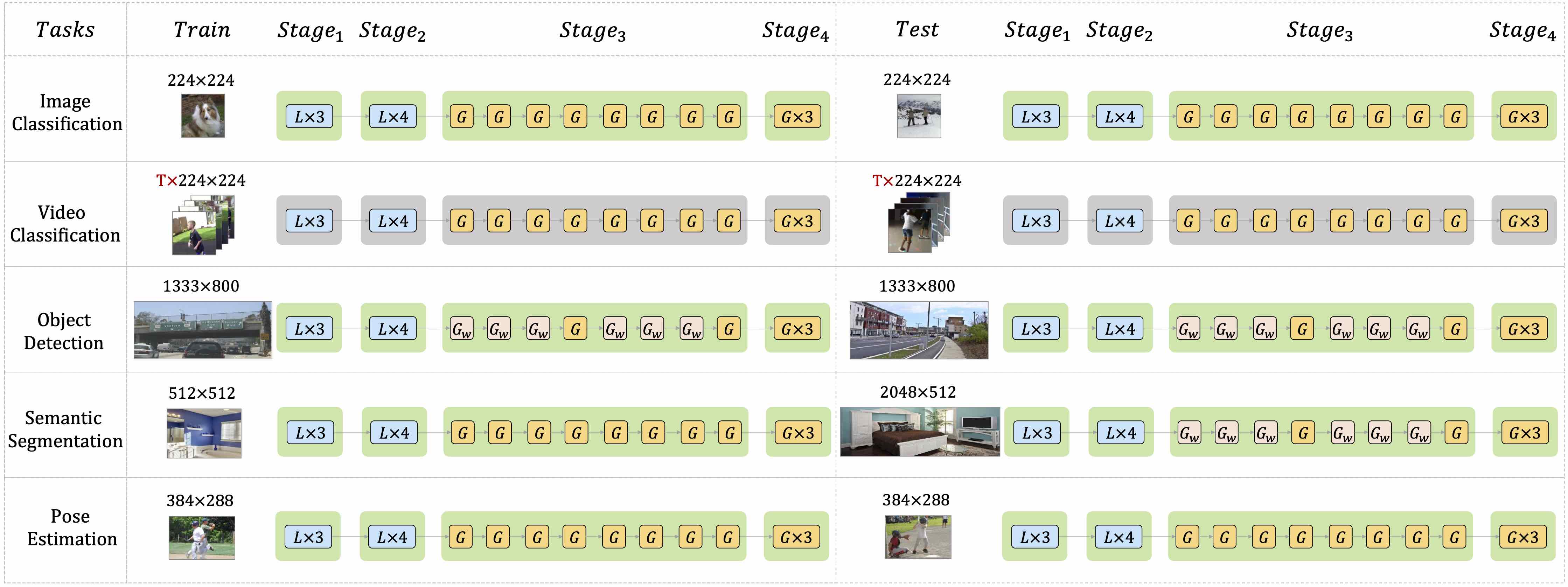

Pose Estion with UniFormer

This project implements a topdown heatmap based human pose estimator, utilizing the approach outlined in UniFormer: Unifying Convolution and Self-attention for Visual Recognition (TPAMI 2023) and UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning (ICLR 2022).

Usage

Preparation

- Setup Development Environment

- Python 3.7 or higher

- PyTorch 1.6 or higher

- MMEngine v0.6.0 or higher

- MMCV v2.0.0rc4 or higher

- MMDetection v3.0.0rc6 or higher

- MMPose v1.0.0rc1 or higher

All the commands below rely on the correct configuration of PYTHONPATH, which should point to the project's directory so that Python can locate the module files. In uniformer/ root directory, run the following line to add the current directory to PYTHONPATH:

export PYTHONPATH=`pwd`:$PYTHONPATH

- Download Pretrained Weights

To either run inferences or train on the uniformer pose estimation project, you have to download the original Uniformer pretrained weights on the ImageNet1k dataset and the weights trained for the downstream pose estimation task. The original ImageNet1k weights are hosted on SenseTime's huggingface repository, and the downstream pose estimation task weights are hosted either on Google Drive or Baiduyun. We have uploaded them to the OpenMMLab download URLs, allowing users to use them without burden. For example, you can take a look at td-hm_uniformer-b-8xb128-210e_coco-256x192.py, the corresponding pretrained weight URL is already here and when the training or testing process starts, the weight will be automatically downloaded to your device. For the downstream task weights, you can get their URLs from the benchmark result table.

Inference

We have provided a inferencer_demo.py with which developers can utilize to run quick inference demos. Here is a basic demonstration:

python demo/inferencer_demo.py $INPUTS \

--pose2d $CONFIG --pose2d-weights $CHECKPOINT \

[--show] [--vis-out-dir $VIS_OUT_DIR] [--pred-out-dir $PRED_OUT_DIR]

For more information on using the inferencer, please see this document.

Here's an example code:

python demo/inferencer_demo.py tests/data/coco/000000000785.jpg \

--pose2d projects/uniformer/configs/td-hm_uniformer-s-8xb128-210e_coco-256x192.py \

--pose2d-weights https://download.openmmlab.com/mmpose/v1/projects/uniformer/top_down_256x192_global_small-d4a7fdac_20230724.pth \

--vis-out-dir vis_results

Then you will find the demo result in vis_results folder, and it may be similar to this:

Training and Testing

- Data Preparation

Prepare the COCO dataset according to the instruction.

- To Train and Test with Single GPU:

python tools/test.py $CONFIG --auto-scale-lr

python tools/test.py $CONFIG $CHECKPOINT

- To Train and Test with Multiple GPUs:

bash tools/dist_train.sh $CONFIG $NUM_GPUs --amp

bash tools/dist_test.sh $CONFIG $CHECKPOINT $NUM_GPUs --amp

Results

Here is the testing results on COCO val2017:

| Model | Input Size | AP | AP50 | AP75 | AR | AR50 | Download |

|---|---|---|---|---|---|---|---|

| UniFormer-S | 256x192 | 74.0 | 90.2 | 82.1 | 79.5 | 94.1 | model | log |

| UniFormer-S | 384x288 | 75.9 | 90.6 | 83.0 | 81.0 | 94.3 | model | log |

| UniFormer-S | 448x320 | 76.2 | 90.6 | 83.2 | 81.4 | 94.4 | model | log |

| UniFormer-B | 256x192 | 75.0 | 90.5 | 83.0 | 80.4 | 94.2 | model | log |

| UniFormer-B | 384x288 | 76.7 | 90.8 | 84.1 | 81.9 | 94.6 | model | log |

| UniFormer-B | 448x320 | 77.4 | 91.0 | 84.4 | 82.5 | 94.9 | model | log |

Here is the testing results on COCO val 2017 from the official UniFormer Pose Estimation repository for comparison:

| Backbone | Input Size | AP | AP50 | AP75 | ARM | ARL | AR | Model | Log |

|---|---|---|---|---|---|---|---|---|---|

| UniFormer-S | 256x192 | 74.0 | 90.3 | 82.2 | 66.8 | 76.7 | 79.5 | ||

| UniFormer-S | 384x288 | 75.9 | 90.6 | 83.4 | 68.6 | 79.0 | 81.4 | ||

| UniFormer-S | 448x320 | 76.2 | 90.6 | 83.2 | 68.6 | 79.4 | 81.4 | ||

| UniFormer-B | 256x192 | 75.0 | 90.6 | 83.0 | 67.8 | 77.7 | 80.4 | ||

| UniFormer-B | 384x288 | 76.7 | 90.8 | 84.0 | 69.3 | 79.7 | 81.4 | ||

| UniFormer-B | 448x320 | 77.4 | 91.1 | 84.4 | 70.2 | 80.6 | 82.5 |

Note:

- All the original models are pretrained on ImageNet-1K without Token Labeling and Layer Scale, as mentioned in the official README . The official team has confirmed that Token labeling can largely improve the performance of the downstream tasks. Developers can utilize the implementation by themselves.

- The original implementation did not include the freeze BN in the backbone. The official team has confirmed that this can improve the performance as well.

- To avoid running out of memory, developers can use

torch.utils.checkpointin theconfig.pyby settinguse_checkpoint=Trueandcheckpoint_num=[0, 0, 2, 0] # index for using checkpoint in every stage - We warmly welcome any contributions if you can successfully reproduce the results from the paper!

Citation

If this project benefits your work, please kindly consider citing the original papers:

@misc{li2022uniformer,

title={UniFormer: Unifying Convolution and Self-attention for Visual Recognition},

author={Kunchang Li and Yali Wang and Junhao Zhang and Peng Gao and Guanglu Song and Yu Liu and Hongsheng Li and Yu Qiao},

year={2022},

eprint={2201.09450},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

@misc{li2022uniformer,

title={UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning},

author={Kunchang Li and Yali Wang and Peng Gao and Guanglu Song and Yu Liu and Hongsheng Li and Yu Qiao},

year={2022},

eprint={2201.04676},

archivePrefix={arXiv},

primaryClass={cs.CV}

}